Dynamic Facial Expression Reconstruction from Upper Half-face Data (2018)

Overview

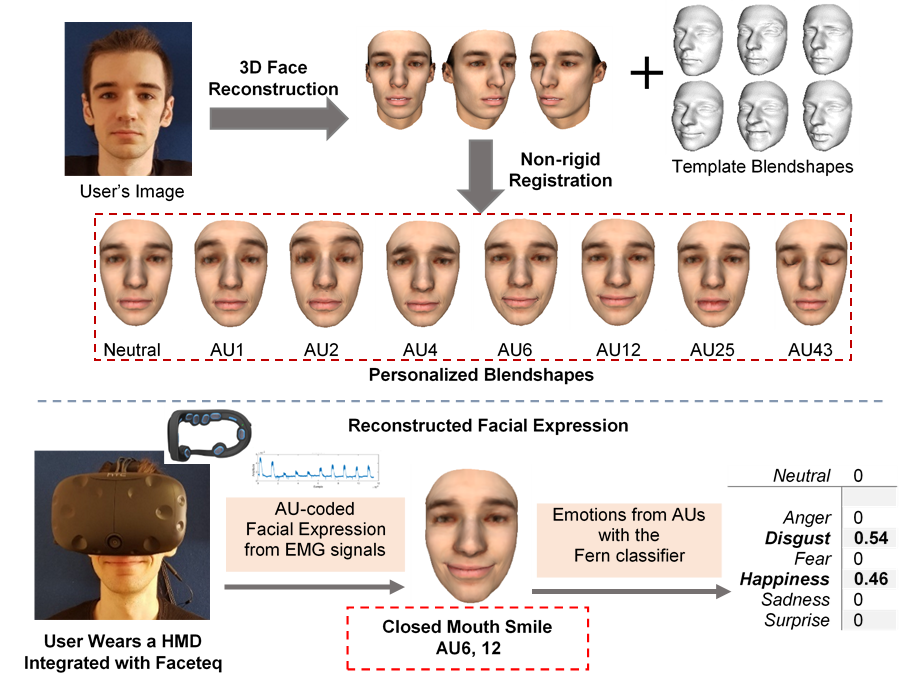

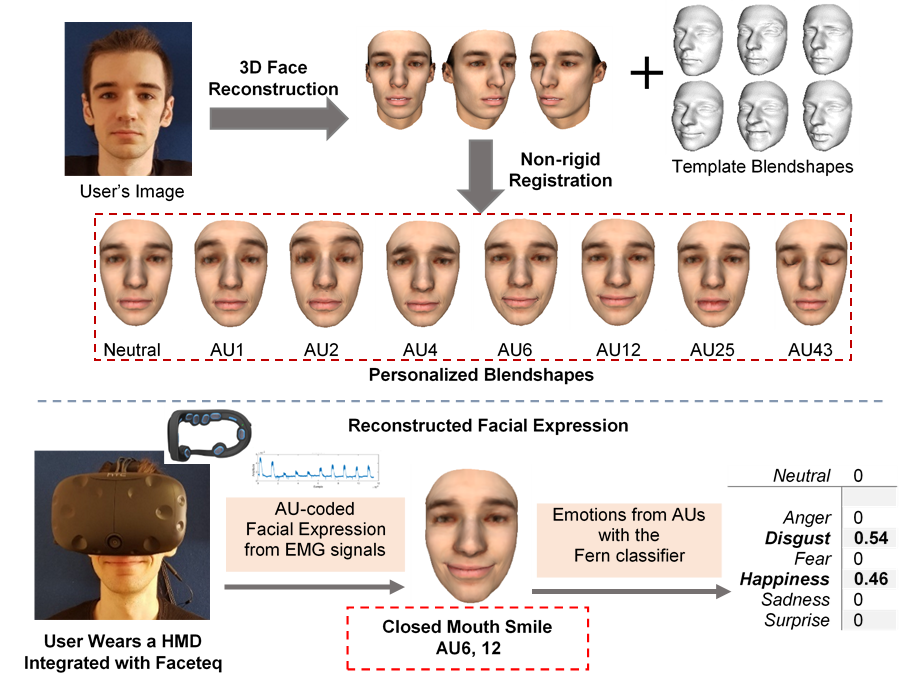

Facial expression is one of the primary non-verbal channels of human communication; a smile, for example, can express happiness or a positive opinion. However, existing VR and AR head-mounted displays (HMDs) such as the HTC Vive and Microsoft HoloLens occlude much of the wearer’s face, especially the eye region, which is one of the most emotion-salient parts of the face. This severely hinders natural interaction between the user and the virtual environment. To alleviate this problem, this project proposes to integrate advanced biometric sensors into the HMD in an unobtrusive manner to capture fine-scale dynamic facial movements, which are then mapped to a user-specific 3D face model to recover the user’s facial expression with high fidelity.

Publication

J. Lou, Y. Wang, C. Nduka, M. Hamedi, I. Mavridou, F.-Y. Wang and H. Yu. “Realistic Facial Expression Reconstruction for VR HMD Users.” IEEE Transactions on Multimedia (TMM), 2019.

Paper (pdf)