Social Interaction: A Cognitive-neurosciences Approach (2009 - 2011)

Overview

The project is funded by the Economic and Social Research Council (ESRC) of the UK. Social interaction is the basis of most human activities. Through social interactions, people make judgments about their partner’s social identity, emotional state, attractiveness and trustworthiness. Psychologists convincingly argue that many of these basic social judgments are made automatically rather than as the result of a conscious decision. Yet little is known about the detailed cognitive and neural mechanisms that support these judgments. This project aims to elucidate these mechanisms using the latest experimental, computational and brain imaging techniques.

Dynamic 3D Facial Expression Reconstruction and Analysis

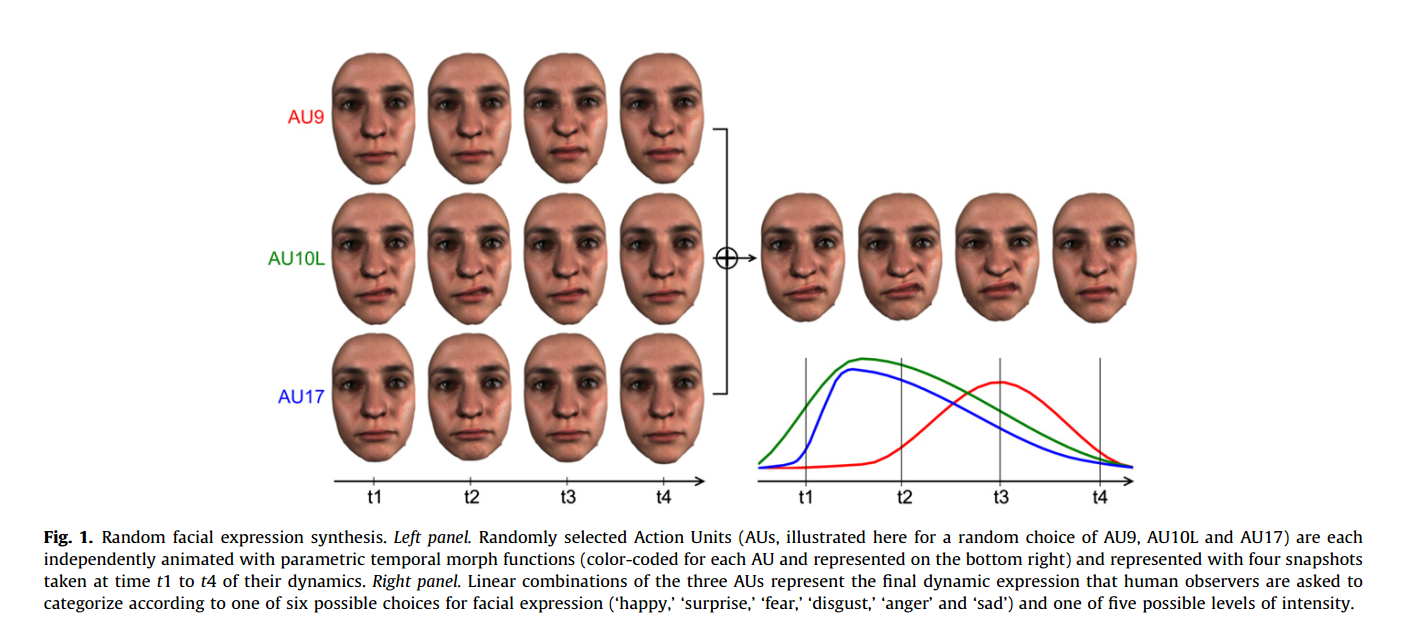

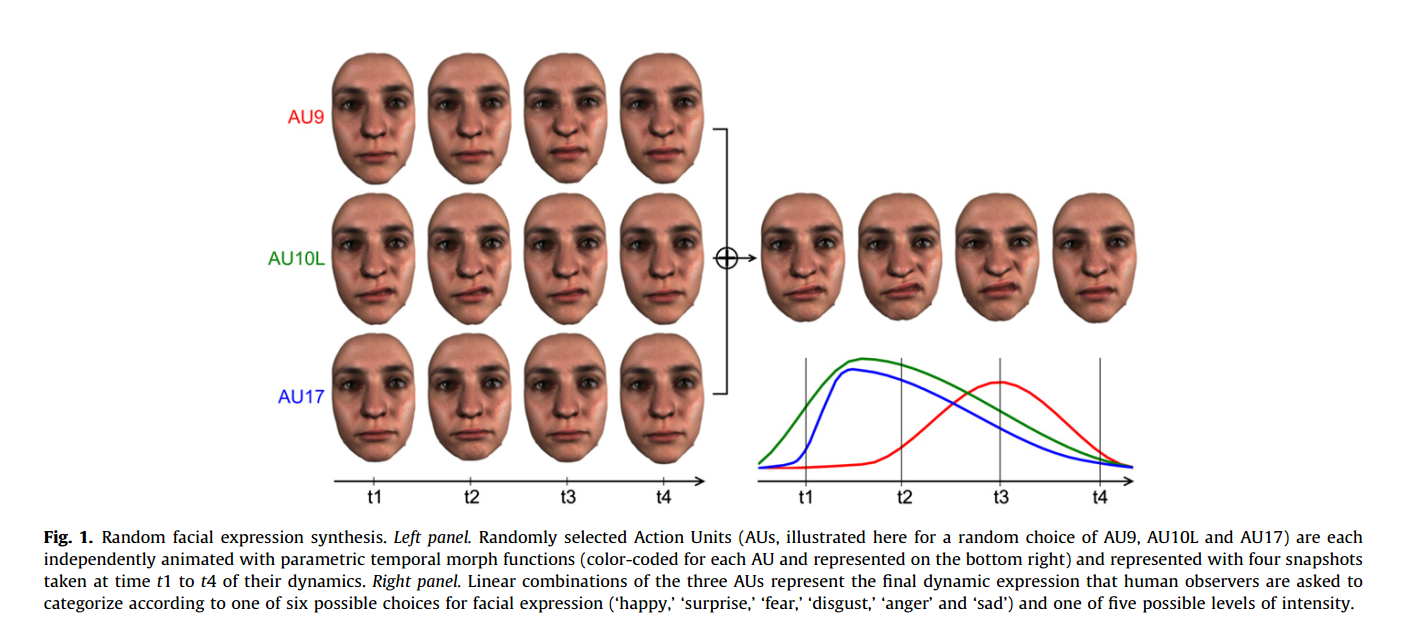

This project investigates novel methods for flexibly synthesizing any meaningful dynamic 3D facial expression, even when no actor performance data exist for that expression. Using computer graphics techniques, we synthesized random dynamic facial expression animations. The synthesis was controlled by parametrically modulating Action Units (AUs) taken from the Facial Action Coding System (FACS). We presented these animations to human observers and instructed them to categorize each one as one of six possible facial expressions. Using techniques from human psychophysics, we then modeled each observer’s internal representation of these expressions by extracting, from the random noise, the expression parameters that were perceptually relevant to their categorizations. Finally, we validated these models of facial expressions with naive observers.
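The idea of "extracting from the random noise the perceptually relevant expression parameters" can be illustrated with a reverse-correlation-style sketch. This is a simplified illustration under stated assumptions, not the project’s actual pipeline. Each random animation is reduced to a binary vector of active AUs; the number of AUs (41), the number active per trial, and the `simulated_observer` standing in for a human are all hypothetical, and the temporal parameters of the real dynamic animations are ignored. Each expression model is estimated as the average AU vector of the trials an observer assigned to that category, minus the average over all trials.

```python
import numpy as np

# Hypothetical sizes: the real AU set and temporal parameters are not reproduced here.
N_AUS = 41
EMOTIONS = ["happy", "surprise", "fear", "disgust", "anger", "sad"]


def random_trial(rng, n_aus=N_AUS, k=4):
    """One random 'animation', reduced to a binary vector with k active AUs."""
    aus = np.zeros(n_aus)
    aus[rng.choice(n_aus, size=k, replace=False)] = 1.0
    return aus


def simulated_observer(aus, templates, rng, noise=0.5):
    """Hypothetical stand-in for a human observer: choose the emotion whose
    hidden template overlaps most with the shown AUs, plus Gaussian decision noise."""
    scores = templates @ aus + rng.normal(0.0, noise, len(templates))
    return int(np.argmax(scores))


def reverse_correlate(trials, responses, n_categories):
    """For each category, average the AU vectors of the trials assigned to it
    and subtract the mean over all trials. What remains are the AUs that
    pushed the observer towards that response."""
    trials = np.asarray(trials)
    responses = np.asarray(responses)
    baseline = trials.mean(axis=0)
    models = np.zeros((n_categories, trials.shape[1]))
    for c in range(n_categories):
        chosen = trials[responses == c]
        if len(chosen) > 0:
            models[c] = chosen.mean(axis=0) - baseline
    return models


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Invented hidden "mental templates": emotion i relies on AUs 3i, 3i+1, 3i+2.
    templates = np.zeros((len(EMOTIONS), N_AUS))
    for i in range(len(EMOTIONS)):
        templates[i, 3 * i:3 * i + 3] = 1.0

    trials = [random_trial(rng) for _ in range(20000)]
    responses = [simulated_observer(t, templates, rng) for t in trials]
    models = reverse_correlate(trials, responses, len(EMOTIONS))

    # The three strongest AUs of each recovered model should match the hidden template.
    for name, model in zip(EMOTIONS, models):
        print(name, sorted(np.argsort(model)[-3:].tolist()))
```

Because the observer here is simulated, the recovered models can be checked against the known templates; with real participants there is no ground truth, which is why a separate validation with naive observers is needed.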

Cross-cultural Investigation of 4D Facial Expressions

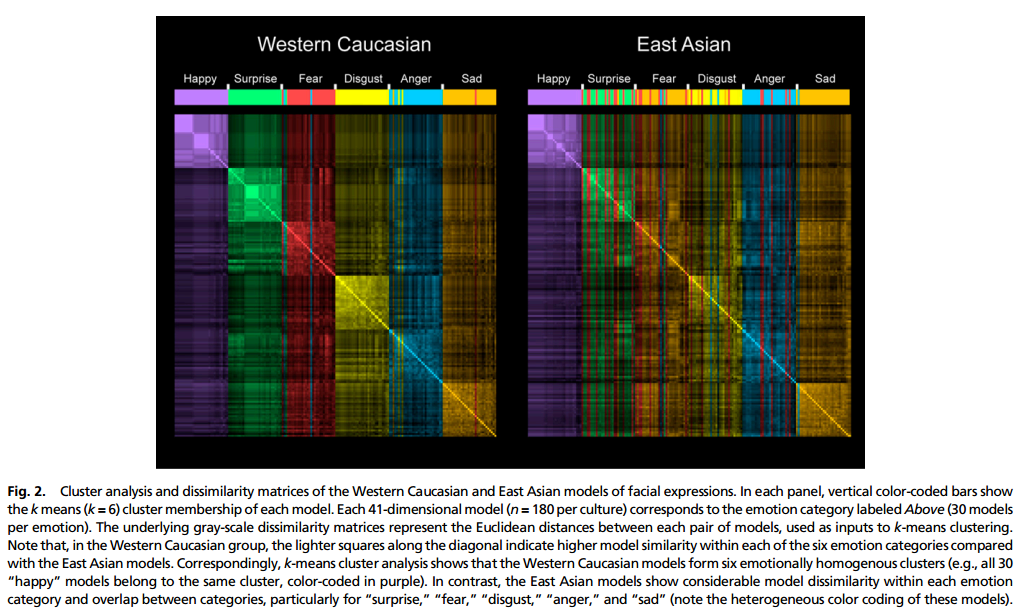

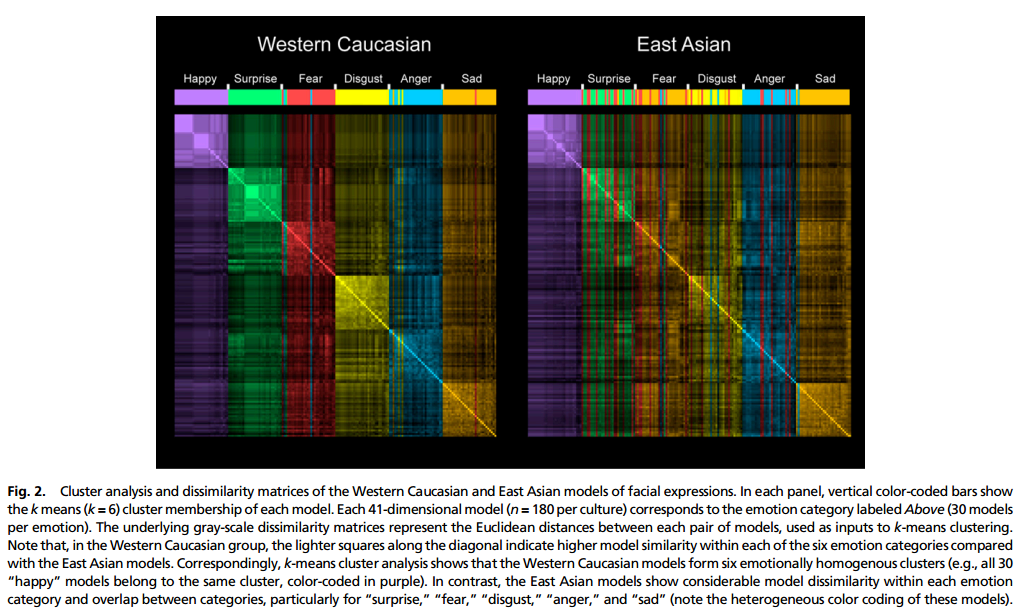

Since Darwin’s seminal works, the universality of facial expressions of emotion has remained one of the longest-standing debates in the biological and social sciences. Here, we refute this assumed universality. Using a unique computer graphics platform that combines generative grammars (the Facial Action Coding System) with visual perception, we accessed the mind’s eye of 30 Western and Eastern culture individuals and reconstructed their mental representations of the six basic facial expressions of emotion. Cross-cultural comparisons of these mental representations challenge universality on two separate counts. First, whereas Westerners represent each of the six basic emotions with a distinct set of facial movements common to the group, Easterners do not. Second, Easterners represent emotional intensity with distinctive dynamic eye activity. By refuting the long-standing universality hypothesis, our data highlight the powerful influence of culture on shaping basic behaviors once considered biologically hardwired. Consequently, our data open a unique nature-nurture debate across broad fields, from evolutionary psychology and social neuroscience to social networking via digital avatars.
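One way to make "cross-cultural comparisons of the mental representations" concrete is to ask how distinct a group’s six emotion models are from one another. The sketch below assumes each group’s models are summarised as one AU vector per emotion and scores distinctness as the mean Pearson correlation between different emotions’ models. This measure and the toy data are illustrative assumptions, not the analysis reported in the paper.

```python
import numpy as np


def model_similarity(models):
    """Pearson correlation between every pair of emotion models.

    `models` is an (n_emotions, n_aus) array: one row per emotion,
    one column per Action Unit (e.g. a group's averaged models)."""
    return np.corrcoef(np.asarray(models, dtype=float))


def mean_between_emotion_similarity(models):
    """Average of the off-diagonal correlations. Lower values mean the group
    represents the emotions with more distinct sets of AUs."""
    sim = model_similarity(models)
    off_diagonal = ~np.eye(sim.shape[0], dtype=bool)
    return float(sim[off_diagonal].mean())


# Toy groups invented for illustration (NOT data from the study): 6 emotions x 12 AUs.
distinct = np.zeros((6, 12))
for i in range(6):
    distinct[i, 2 * i:2 * i + 2] = 1.0  # each emotion uses its own two AUs

overlapping = distinct.copy()
overlapping[:, 0] = 1.0  # every emotion additionally shares AU 0

print(round(mean_between_emotion_similarity(distinct), 3))  # -0.2
print(round(mean_between_emotion_similarity(overlapping), 3))
```

A single summary number hides which emotions overlap; inspecting the full matrix returned by `model_similarity` shows which pairs of expressions share facial movements.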

Publication

Yu, Hui, Oliver GB Garrod, and Philippe G. Schyns. “Perception-driven facial expression synthesis.” Computers & Graphics 36, no. 3 (2012): 152-162.

Paper (pdf)

Jack, Rachael E., Oliver GB Garrod, Hui Yu, Roberto Caldara, and Philippe G. Schyns. “Facial expressions of emotion are not culturally universal.” Proceedings of the National Academy of Sciences 109, no. 19 (2012): 7241-7244.

Paper (pdf)